Source: https://www.internetexchangemap.com/

In the 1980s, there were only a handful of places in the public internet where internet service providers could connect to pass data between each other and with their users. These places, known as internet exchange points, were located at the intersections of major fiber optic routes. As the 2021 map above shows, the number of these locations has grown, but they remain centralized in areas of high population and fiber density. In addition, carriers have built private peering points to deliver traffic directly to one another. I’ll call internet exchange and private peering points collectively interexchange points (IXPs). In a highly centralized cloud computing world, decisions on where to place IXPs were driven by economies of scale, fiber access, and the locations of the large cloud service providers. End-to-end latency, the time it takes a packet to travel from one end of the network to the other, was a secondary concern. As a result, latency-sensitive edge-native applications will not be able to deliver acceptable user experiences on today’s networks.

Client-Cloudlet Path

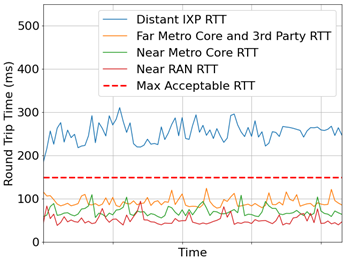

In edge computing, there are many scenarios where users and edge computing nodes (aka cloudlets) need to interact even though they may be connected to different carrier networks. For example, data from a multiplayer game cloudlet will pass through one or more IXPs to reach players on different networks. If the IXP is in Phoenix while the players and cloudlet are in Pittsburgh, end-to-end latency can grow from tens to hundreds of milliseconds, rendering the game unplayable. Distant IXPs can be catastrophic for many edge-native applications.
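To see why a distant IXP hurts so much, here is a back-of-the-envelope propagation estimate. It is a minimal sketch under stated assumptions, not the method used in the OEC study: it assumes signals in fiber travel about 200 km per millisecond, uses great-circle (haversine) distances in place of real fiber routes, and places a hypothetical cloudlet and a hypothetical in-metro IXP at illustrative Pittsburgh coordinates. It ignores queuing, switching and processing delay, so it gives a lower bound rather than a measurement.

```python
import math

# Assumption: light in optical fiber travels at roughly 2/3 of c,
# i.e. about 200 km per millisecond. Actual values depend on the fiber.
FIBER_KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def hairpin_rtt_ms(user, ixp, cloudlet):
    """Propagation-only round-trip time when traffic hairpins through an IXP.

    The request travels user -> IXP -> cloudlet and the reply retraces that
    path, so the one-way distance is counted twice. Real fiber routes are
    longer than great-circle paths, and queuing and processing add delay,
    so the result is a lower bound.
    """
    one_way_km = great_circle_km(*user, *ixp) + great_circle_km(*ixp, *cloudlet)
    return 2 * one_way_km / FIBER_KM_PER_MS


# Approximate (lat, lon) coordinates, chosen for illustration only.
PITTSBURGH_USER = (40.4406, -79.9959)      # downtown Pittsburgh
PITTSBURGH_CLOUDLET = (40.4443, -79.9436)  # hypothetical cloudlet a few km away
PITTSBURGH_IXP = (40.4406, -79.9959)       # hypothetical in-metro IXP
PHOENIX_IXP = (33.4484, -112.0740)         # distant IXP in Phoenix

if __name__ == "__main__":
    local = hairpin_rtt_ms(PITTSBURGH_USER, PITTSBURGH_IXP, PITTSBURGH_CLOUDLET)
    remote = hairpin_rtt_ms(PITTSBURGH_USER, PHOENIX_IXP, PITTSBURGH_CLOUDLET)
    print(f"In-metro IXP: {local:.2f} ms minimum RTT")
    print(f"Phoenix IXP:  {remote:.2f} ms minimum RTT")
```

Under these assumptions, hairpinning through Phoenix crosses roughly 2,900 km four times per round trip, adding close to 60 ms of pure propagation delay before any real-world route inflation or queuing, while the in-metro path stays well under a millisecond.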

In early 2020, the Open Edge Computing (OEC) Initiative established a workstream to investigate this IXP placement challenge. Using the Carnegie Mellon University Living Edge Lab and the InterDigital AdvantEDGE Mobile Edge Emulation Platform, we evaluated the impact of different IXP locations on the performance of the OpenRTiST edge-native application. Some results of this work are shown in the accompanying diagram.

The workstream’s findings are described in the OEC Whitepaper, “How Close to the Edge? -- Edge Computing and Carrier Interexchange”.

The business success of edge computing depends on addressing the limitations imposed by the industry’s legacy approach to carrier interconnect. These limitations render many edge-native applications unusable in many scenarios. Our results lead to the following conclusions about what viable edge computing requires:

- Regardless of IXP location, edge computing “cloudlets” must be located in the same metro/region as the application users.

- Once metro cloudlets are deployed, IXPs must be established within the metro area, and networks must be engineered so that user-to-cloudlet data paths do not leave the metro.

- Within the metro area, the marginal performance benefit of moving IXPs closer to the user (e.g., to the cell tower) is small and may not justify the cost.

- Third-party, neutral-host metro IXPs will provide performance equivalent to direct carrier-to-carrier IXPs, potentially with lower complexity.

- While application performance is the main criterion for IXP placement decisions, other requirements such as lawful intercept and data geofencing must also be considered.

Given long planning and implementation cycles, we strongly recommend that carriers immediately begin deploying metro-based IXPs with other carriers to enable low-latency edge computing.

For more information on this work, see the OEC Whitepaper, “How Close to the Edge? -- Edge Computing and Carrier Interexchange”, or drop us a note at info@openedgecomputing.org. The inspiration for this work was Tomasz Gerszberg’s December 2019 LinkedIn blog post. A special thank you to InterDigital, Vodafone and VaporIO for their support of this workstream.

This research was supported by the Defense Advanced Research Projects Agency (DARPA) under Contract No. HR001117C0051 and by the National Science Foundation (NSF) under grant number CNS-1518865 and the NSF Graduate Research Fellowship under grant numbers DGE1252522 and DGE1745016. Additional support was provided by Intel, Vodafone, Deutsche Telekom, Crown Castle, InterDigital, Seagate, Microsoft, VMware and the Conklin Kistler family fund. Any opinions, findings, conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the view(s) of their employers or the above funding sources.

References

1. M. Satyanarayanan. “The Emergence of Edge Computing”. In: Computer 50.1 (2017), pp. 30–39.

2. The Open Edge Computing Initiative. https://openedgecomputing.org/

3. M. Satyanarayanan et al. “Cloudlets: at the leading edge of mobile-cloud convergence”. In: 6th International Conference on Mobile Computing, Applications and Services. 2014, pp. 1–9.

4. Tomasz Gerszberg. Shared Edge Experience. https://www.linkedin.com/pulse/shared-edge-experience-tomasz-gerszberg

5. M. Satyanarayanan et al. “The Seminal Role of Edge-Native Applications”. In: 2019 IEEE International Conference on Edge Computing (EDGE). 2019, pp. 33–40.

6. Carnegie Mellon University. The Living Edge Lab. https://openedgecomputing.org/living-edge-lab/ and https://www.cmu.edu/scs/edgecomputing/index.html

7. Michel Roy, Kevin Di Lallo, and Robert Gazda. AdvantEDGE: A Mobile Edge Emulation Platform (MEEP). https://github.com/InterDigitalInc/AdvantEDGE

8. S. George et al. “OpenRTiST: End-to-End Benchmarking for Edge Computing”. In: IEEE Pervasive Computing (2020), pp. 1–9. DOI: 10.1109/MPRV.2020.3028781.

Jim Blakley