IMAGE CREDIT: Pavan Trikutam -- Unsplash

In the mid-1980s, Bell Labs conducted a human-factors study to understand how telephone users conceptualized the telephone network. Within the switching engineering division, we thought of the network as a complex collection of switching, transmission and operation systems interconnected by a web of copper and fiber “outside plant”. But our customers, who only used the network to make phone calls, had a much simpler mental model – two telephones attached to an opaque cloud. Those two phones were nebulously connected to each other by dialing digits or speaking to an operator who resided somewhere in that cloud. The network itself was unimportant to users and, in their minds, had little impact on the quality of their experience. And, even if they wanted to know more, information about the network was not available to them. But this infrastructure opaqueness was not a major problem because Bell Labs engineers understood and could design for user experience metrics like dial tone wait times and voice quality.

In today’s world of mobile device applications accessing cloud and edge computing resources over mobile networks, infrastructure opaqueness persists but is compounded by application opaqueness. Old-fashioned telephone calling applications were limited and well understood. Today’s mobile applications, like gaming, video conferencing and video surveillance, are unbounded in number and diversity. They rely on multi-generational, multi-operator networks connected to multiple cloud and edge computing service providers. Mobile network designers and mobile application developers face significant gaps in their knowledge of each other’s world, just as those 1980s telephone users knew little about the telephone network.

But the user’s quality of experience depends on assuring acceptable application performance in the face of opaque and diverse network and compute performance. We see this firsthand when our video streaming service begins to “spin” while waiting for a download over a congested network. Content Delivery Networks, the first widespread use of edge computing, were overlaid on existing networks to address the spin problem. Standards like MPEG-DASH and HLS emerged to detect and mitigate bandwidth limitations in video delivery applications. General purpose edge computing is enabling applications well beyond video delivery, but new tools and approaches will be needed so that application developers can design for diverse network and computing environments and network operators can design for the many applications that their networks must support.

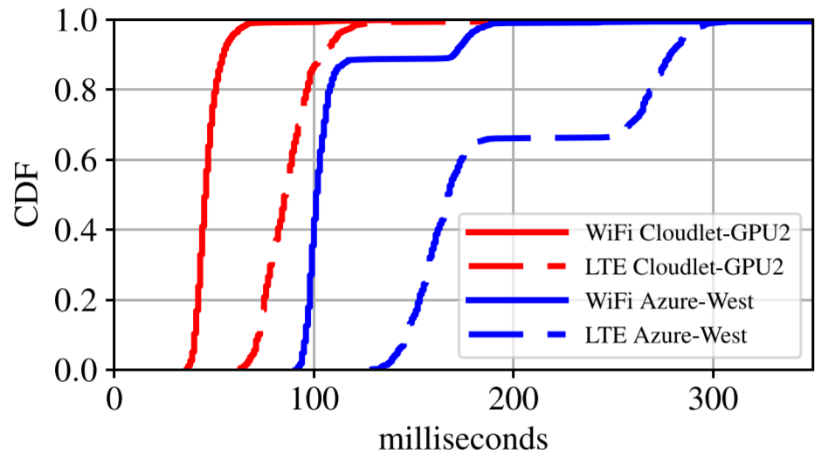

At the Living Edge Lab, we have been working on approaches that use benchmarking and simulation of edge-native applications in networks to understand the interplay between applications and their environments. This work starts from the premise that application experience, quantitatively measured at the user device, is key to understanding the value and limitations of the infrastructure that supports that application. Our work uses an instrumented version of the OpenRTIST edge-native application to collect key application experience metrics like framerate and end-to-end (E2E) latency in different network and computing environments. The first work, by Shilpa George, Thomas Eiszler, Roger Iyengar, Haithem Turki, Ziqiang Feng and Junjue Wang from Carnegie Mellon University and Padmanabhan Pillai from Intel Labs, measured the E2E application latency in a variety of physical edge and cloud-based environments over WiFi and LTE networks. As shown at left, this work found substantial differences in E2E latency depending on what infrastructure the application ran on.
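As a rough illustration of what measuring application experience at the user device can look like, the sketch below derives E2E latency and framerate from per-frame timestamps. It is not the OpenRTIST instrumentation itself: the function name `summarize_frames`, the `(sent_s, received_s)` sample format and the example numbers are all assumptions made for this example.

```python
from statistics import mean, median


def summarize_frames(samples):
    """Summarize application experience from per-frame timestamps.

    `samples` is a hypothetical list of (sent_s, received_s) pairs, one per
    frame, in seconds on the client's clock and ordered by receive time:
    when the frame left the device and when its processed result came back.
    """
    if len(samples) < 2:
        raise ValueError("need at least two frames to estimate framerate")

    # E2E latency per frame: time from sending a frame to receiving its result.
    latencies_ms = [(received - sent) * 1000.0 for sent, received in samples]

    # Framerate: frame intervals completed per second of receive time.
    elapsed_s = samples[-1][1] - samples[0][1]
    if elapsed_s <= 0:
        raise ValueError("receive timestamps must be increasing")
    framerate_fps = (len(samples) - 1) / elapsed_s

    return {
        "mean_e2e_ms": mean(latencies_ms),
        "median_e2e_ms": median(latencies_ms),
        "framerate_fps": framerate_fps,
    }


if __name__ == "__main__":
    # Made-up samples: four frames sent 100 ms apart, each returning 50 ms later.
    demo = [(0.0, 0.05), (0.1, 0.15), (0.2, 0.25), (0.3, 0.35)]
    print(summarize_frames(demo))
```

Taking both timestamps on the device avoids any need for clock synchronization with the edge or cloud backend, which is one reason to measure at the client.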

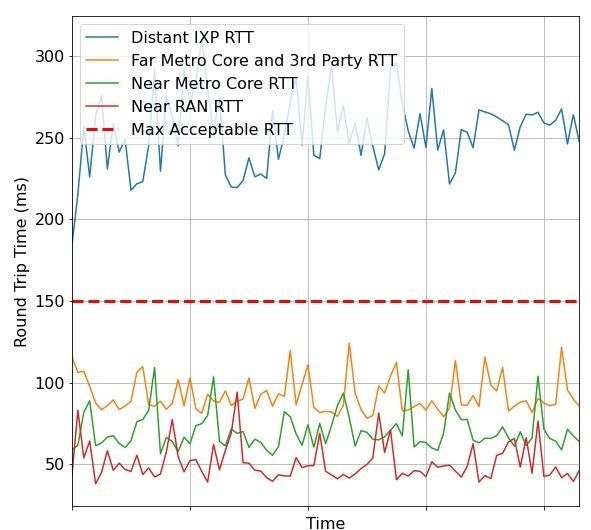

The second work, by me, Roger Iyengar and Michel Roy from InterDigital, extended the first work by running a series of simulations on an environment built around the AdvantEDGE network emulator. These simulations varied the location and characteristics of users, edge nodes and network interconnect points while the application ran. We also looked at the impacts of network handoff, cell type and mobility on application performance. As an example, the figure below shows the impact on OpenRTIST round trip time and framerate at different simulated carrier interconnect points.

So far, this work focuses on a single user and a single, specific application, but we do have some key learnings.

- For applications with high levels of user interactivity, low-latency networking to “backend” computing is critical. In many of our experiments, connection to cloud infrastructure outside of our metro area caused unacceptable E2E latency of more than 150ms.

- Carrier interconnect points caused similar E2E latency challenges. Without a local metro carrier interconnect, application sessions from an LTE mobile device to an edge node on a local wired carrier were routed through an interconnect point outside the metro area, resulting in the same 150ms+ E2E latency.

- When the network path stays within the metro area, E2E latency begins to be driven as much by application compute latency as by network latency. This effect is, of course, highly application specific. But it suggests that attention to the CPU and GPU compute resources available from edge nodes is important for experience acceptability.
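The learnings above can be read as a simple latency budget. The sketch below is a simplification under stated assumptions: it treats E2E latency as network round trip time plus backend compute time (ignoring device-side capture and rendering), it uses the 150ms figure from our experiments as the acceptability threshold, and the `LatencyBudget` class and every number in the example scenarios are hypothetical, not measured results.

```python
from dataclasses import dataclass

# Threshold taken from the learnings above; treat it as application specific.
ACCEPTABLE_E2E_MS = 150.0


@dataclass
class LatencyBudget:
    """Hypothetical two-part model of E2E latency for one frame."""
    network_rtt_ms: float  # device <-> backend round trip time
    compute_ms: float      # backend (CPU/GPU) processing time

    @property
    def e2e_ms(self) -> float:
        # Assumption: E2E latency is just network plus compute.
        return self.network_rtt_ms + self.compute_ms

    @property
    def compute_share(self) -> float:
        # Fraction of E2E latency spent on compute rather than the network.
        return self.compute_ms / self.e2e_ms if self.e2e_ms > 0 else 0.0

    def acceptable(self) -> bool:
        return self.e2e_ms <= ACCEPTABLE_E2E_MS


if __name__ == "__main__":
    # Illustrative numbers only, chosen to mirror the three learnings.
    scenarios = {
        "in-metro edge node": LatencyBudget(network_rtt_ms=20.0, compute_ms=30.0),
        "out-of-metro cloud": LatencyBudget(network_rtt_ms=130.0, compute_ms=30.0),
    }
    for name, budget in scenarios.items():
        print(f"{name}: {budget.e2e_ms:.0f} ms E2E, "
              f"{budget.compute_share:.0%} compute, "
              f"acceptable={budget.acceptable()}")
```

In the made-up in-metro scenario, compute accounts for more than half of the budget, which is the situation where edge node CPU and GPU resources start to matter as much as the network path.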

Going forward, we’ll focus on diversifying the number and types of applications under study to include multi-user interactive, IoT and edge analytics applications. We also plan to test in more real and simulated network environments to further validate and extend our learnings.

This work shows the value of using application benchmarking and system simulation to better understand what’s behind the network and computing curtain imposed by infrastructure opaqueness. These are not the only tools developers will need to design and characterize their applications but they can provide key insights into application acceptability in the face of widely varying environments.

For more information on our work in this area, see the following.

REFERENCES

- S. George et al., "OpenRTiST: End-to-End Benchmarking for Edge Computing," in IEEE Pervasive Computing, vol. 19, no. 4, pp. 10-18, 1 Oct.-Dec. 2020, doi: 10.1109/MPRV.2020.3028781.

- J. Blakley et al., "Simulating Edge Computing Environments to Optimize Application Experience," Carnegie Mellon University Technical Report, CMU-CS-20-135, November 2020.

Jim Blakley
