By Jim Blakley, Associate Director

Carnegie Mellon University Living Edge Lab

As the Covid-19 pandemic continues across the globe, the need for people to work separately from each other grows. And the activities they need to accommodate go beyond endless Zoom meetings and online classes. In-person, hands-on training has virtually stopped over the last six months. Hands-on instruction is used extensively in manufacturing, health care, construction, the trades and other professions to acquaint employees and customers with new equipment usage, product assembly, operational procedures and maintenance tasks. Travel restrictions, social distancing practices and work-from-home policies make it difficult for trainers to be physically present with their trainees, and one-on-one remote training sessions do not scale to large groups of trainees.

This situation has increased the motivation to apply augmented reality (AR), especially a category of AR we at Carnegie Mellon University (CMU) refer to as Wearable Cognitive Assistance (WCA), to the problem of hands-on remote training. Over the last several years, interest in AR head-mounted wearables like Google Glass, Magic Leap and Microsoft HoloLens has peaked and waned. As recently as August 2019, the Gartner Hype Cycle placed AR and head-mounted displays (HMD) deep in the “Trough of Disillusionment”. However, the current environment is rapidly pushing them up the “Slope of Enlightenment”. Indeed, the HoloLens 2 launch in late 2019, the general availability of Google Glass Enterprise Edition 2, the demo of Samsung’s AR glasses at CES 2020 and the rumored Apple Glasses launch in 2022 or 2023 indicate that companies continue to believe in these technologies.

In the CMU Living Edge Lab, we’ve been working on Wearable Cognitive Assistance since 2014. WCA is a class of applications that provide automated, real-time hints and guidance to users by analyzing their actions, as captured through an AR-HMD, while they complete a task. The task can require very rapid response, like playing table tennis; fine-grained motor control, like playing billiards; or step-by-step guidance through a process, like assembling a lamp.

While the public conception of AR evokes thoughts of 3D monsters marauding through the real world, WCA focuses on providing a simple “angel on your shoulder” to impart the minimal information needed to allow you to complete your task with the least distraction. Forbes has outlined many commercial WCA application examples from a variety of industries.

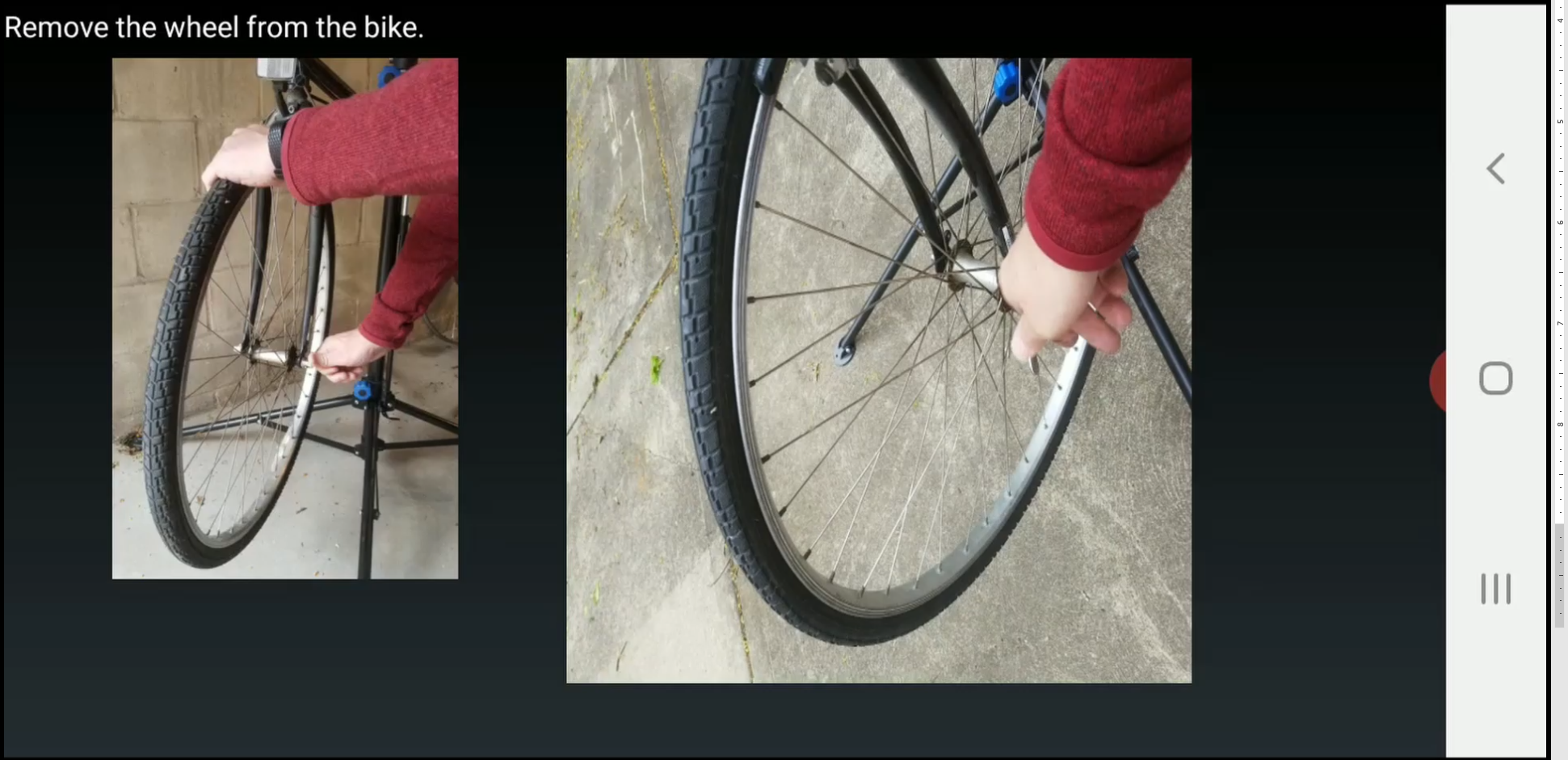

As one of my first tasks when I joined CMU earlier this year, I created a WCA application that guides a user through changing a flat bicycle tire (video).

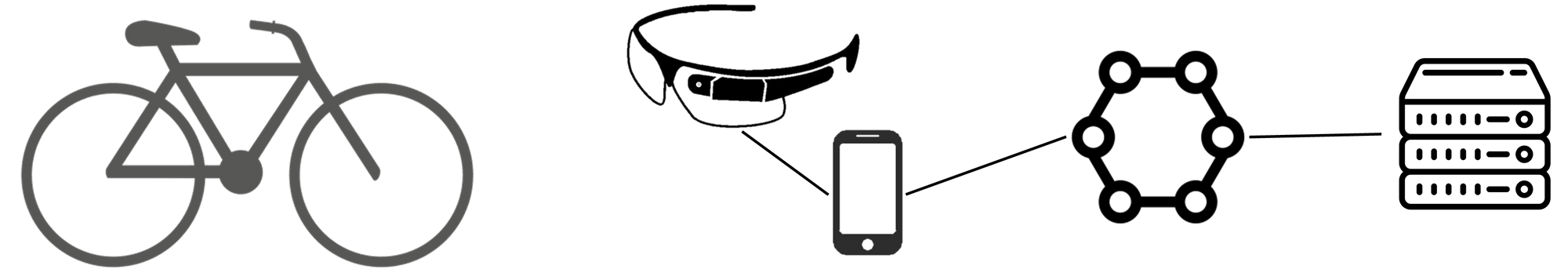

The basic architecture of this system is shown below.

While the application is a toy, it illustrates several critical requirements for creating and using a WCA solution.

- Edge Computing – In my neighborhood, transmitting video frames from the AR-HMD over a mobile wireless network to a public cloud-based object detector can take 100 to 250 milliseconds. For applications like this, that delay and jitter make the experience feel slow and choppy. For my work, I used a private Wi-Fi network to get acceptable latency, but that isn’t practical for many mobile applications; nearby, low-latency “cloudlets” will be required.

- Application-Specific Image Detector – In “FlatTire”, the cloudlet needs to recognize the key objects in the video from the client – bicycle wheels, pumps, forks, tire levers, tubes and tires. Since these objects are rarely found in general-purpose datasets, I needed to capture and label a training set and train a detector to recognize each of them.

- Workflow Execution Engine – Each WCA has unique, application-specific steps to execute and ways that the system or user can fail to execute them correctly. I needed to design an application state machine to define the workflow that the user experiences. Frankly, since this was only a toy, I skipped most of the error paths, but those are often the most difficult to identify exhaustively and handle.

- A Client Application – To close the loop between the user and the application, the client forwards video frames to a remote object detector and relays instructions from the workflow engine verbally and visually to the user. We created the Gabriel platform to make this easy and general-purpose. More about Gabriel below.

- “Wearable” AR-HMD – Since I didn’t have access to an AR-HMD, I implemented my client application wholly on a smartphone. The video linked above shows how awkward the lack of hands-free operation makes the task; look closely at the tire inflation step.
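The workflow execution engine described above is essentially a state machine driven by the detector’s output. The Python sketch below is not the FlatTire code: the step list, the instructions and the detector labels (`wheel_removed`, `tire_off_rim` and so on) are hypothetical, and, like the toy application, it omits error paths. It assumes the detector reports a set of labels for each frame.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Step:
    """One step of a hypothetical FlatTire-style workflow."""
    instruction: str   # guidance the client speaks or displays
    completed_by: str  # hypothetical detector label signalling the step is done


# Hypothetical steps and detector labels, for illustration only.
STEPS: List[Step] = [
    Step("Remove the wheel from the fork.", "wheel_removed"),
    Step("Use the tire levers to pry the tire off the rim.", "tire_off_rim"),
    Step("Replace the tube and reseat the tire.", "tube_installed"),
    Step("Inflate the tire with the pump.", "tire_inflated"),
]
DONE_MESSAGE = "All done. The flat is fixed."


class Workflow:
    """Linear state machine that advances when the current step's label is detected."""

    def __init__(self, steps: List[Step]) -> None:
        self.steps = steps
        self.index = 0  # current state: index of the step in progress

    @property
    def finished(self) -> bool:
        return self.index >= len(self.steps)

    def current_instruction(self) -> str:
        return DONE_MESSAGE if self.finished else self.steps[self.index].instruction

    def on_frame(self, labels: FrozenSet[str]) -> Optional[str]:
        """Process one frame's detected labels; return new guidance, or None if unchanged."""
        if self.finished:
            return None
        if self.steps[self.index].completed_by in labels:
            self.index += 1
            return self.current_instruction()
        # Error paths (wrong part, skipped step, etc.) would be handled here.
        return None


if __name__ == "__main__":
    wf = Workflow(STEPS)
    print(wf.current_instruction())
    for labels in [{"bicycle"}, {"wheel_removed"}, {"tire_off_rim", "tire_lever"}]:
        guidance = wf.on_frame(frozenset(labels))
        if guidance:
            print(guidance)
```

Returning `None` when nothing changes lets the client repeat guidance only when the state actually advances; a fuller engine would add states and transitions for the error cases mentioned above.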

Within the WCA research space at CMU, we focus on two main questions:

- How do application developers create WCA applications, and what tools are needed to make this easy and repeatable? Over my next several blog posts, I will go deeper into the open source platforms we’ve created for this purpose.

- How can edge computing make WCA applications more useful and usable? In the future, I’ll talk more about the applicability of edge computing to WCA and other classes of applications.

To address part of the application creation problem, we started development of the Gabriel platform in 2013 and just released version 2.0 in July 2020. Gabriel is a device-to-edge WCA application framework that provides libraries for Python and Android, as well as a cloudlet-based server. It offers network abstractions for transmitting sensor data (such as images) from clients to a cloudlet. After the cloudlet processes data, it sends results or feedback to the client using Gabriel’s abstractions. The client can then provide instructions to the user with textual displays or audio feedback.
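The client-to-cloudlet round trip described above can be sketched with Python’s `asyncio`. This is not Gabriel’s API: the `Frame` and `Result` classes, `cognitive_engine`, `run_session`, and the pair of in-process `asyncio.Queue` objects standing in for the network are all hypothetical. Gabriel 2.0 itself carries this traffic over WebSockets and ZeroMQ using Protocol Buffers.

```python
import asyncio
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Frame:
    frame_id: int
    image: bytes  # e.g. one JPEG-encoded camera frame


@dataclass
class Result:
    frame_id: int
    feedback: str  # text the client displays or speaks to the user


def cognitive_engine(frame: Frame) -> Result:
    """Hypothetical stand-in for cloudlet-side processing such as an object detector."""
    return Result(frame.frame_id, f"frame {frame.frame_id}: {len(frame.image)} bytes analyzed")


async def cloudlet(uplink: asyncio.Queue, downlink: asyncio.Queue) -> None:
    """Receive frames, process each one, and send the result back to the client."""
    while True:
        frame: Optional[Frame] = await uplink.get()
        if frame is None:  # sentinel: the client has stopped sending
            await downlink.put(None)
            return
        await downlink.put(cognitive_engine(frame))


async def client(images: List[bytes], uplink: asyncio.Queue,
                 downlink: asyncio.Queue) -> List[str]:
    """Stream sensor data up while collecting feedback coming back down."""

    async def send() -> None:
        for i, image in enumerate(images):
            await uplink.put(Frame(i, image))
        await uplink.put(None)

    async def receive() -> List[str]:
        shown: List[str] = []
        while (result := await downlink.get()) is not None:
            shown.append(result.feedback)  # a real client would render or speak this
        return shown

    _, shown = await asyncio.gather(send(), receive())
    return shown


async def run_session(images: List[bytes]) -> List[str]:
    # Two in-process queues stand in for the client-to-cloudlet network link.
    uplink: asyncio.Queue = asyncio.Queue()
    downlink: asyncio.Queue = asyncio.Queue()
    server = asyncio.create_task(cloudlet(uplink, downlink))
    shown = await client(images, uplink, downlink)
    await server
    return shown


if __name__ == "__main__":
    for line in asyncio.run(run_session([b"fake-jpeg-1", b"fake-jpeg-22"])):
        print(line)
```

Sending and receiving run concurrently via `asyncio.gather`, which mirrors the full-duplex link a real client keeps open while frames stream up and feedback streams down.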

Gabriel was originally developed by then-CMU PhD student Zhuo Chen, significantly evolved by recent CMU PhD graduate Junjue Wang, and is now being enhanced by current CMU PhD student Roger Iyengar. It is fully open source under the Apache 2.0 license and available on GitHub. Gabriel 2.0 uses Python 3, asyncio, WebSockets, ZeroMQ and Protocol Buffers. It also implements flow control to support running multiple cognitive engines in parallel on the same sensor stream. Work on Gabriel 3.0 is now underway. Our ongoing focus is adding the ability to fall back to a human agent in cases where automated assistance fails.
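One common way to implement flow control for several engines sharing one sensor stream is a per-engine token pool: a frame is sent to an engine only while that engine has a free token, and each returned result gives the token back. The sketch below simulates that general technique in discrete time; it is not Gabriel’s code, and `TokenPool`, `simulate`, the 33 ms frame interval (roughly 30 fps) and the engine latencies are all assumptions for illustration.

```python
from typing import Dict, List


class TokenPool:
    """Flow control for one engine: at most `tokens` frames may be in flight."""

    def __init__(self, tokens: int) -> None:
        self.tokens = tokens

    def try_acquire(self) -> bool:
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False

    def release(self) -> None:
        self.tokens += 1


def simulate(frame_interval_ms: int, num_frames: int,
             engine_latency_ms: Dict[str, int],
             tokens_per_engine: int = 1) -> Dict[str, List[int]]:
    """Return, for each engine, the ids of the frames actually sent to it."""
    pools = {name: TokenPool(tokens_per_engine) for name in engine_latency_ms}
    in_flight: Dict[str, List[int]] = {name: [] for name in engine_latency_ms}
    sent: Dict[str, List[int]] = {name: [] for name in engine_latency_ms}

    for frame_id in range(num_frames):
        now = frame_id * frame_interval_ms  # capture time of this frame
        for name, latency in engine_latency_ms.items():
            # Each result that has come back by `now` returns its token.
            finished = [t for t in in_flight[name] if t <= now]
            for t in finished:
                in_flight[name].remove(t)
                pools[name].release()
            # Send the newest frame only if a token is free; otherwise drop it
            # for this engine rather than queueing a stale backlog.
            if pools[name].try_acquire():
                in_flight[name].append(now + latency)
                sent[name].append(frame_id)
    return sent


if __name__ == "__main__":
    # ~30 fps camera; hypothetical engine latencies in milliseconds.
    print(simulate(33, 6, {"fast": 20, "slow": 100}))
    # {'fast': [0, 1, 2, 3, 4, 5], 'slow': [0, 4]}
```

With one token per engine, the fast engine keeps pace with the camera while the slow engine receives only frames 0 and 4. Neither engine accumulates a backlog of stale frames, and the slow engine never holds back the fast one.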

Next Up – OpenTPOD, for fast creation of WCA object detectors, and OpenWorkFlow, for the specification of WCA workflow state machines.

For a more thorough introduction to Wearable Cognitive Assistance, see Augmenting Cognition Through Edge Computing. To try out Gabriel and contribute to its evolution, check it out on GitHub. For demos of more WCA applications, see this YouTube playlist and the videos below.

© 2020 Carnegie Mellon University

Publications

- "Augmenting Cognition Through Edge Computing", Satyanarayanan, M., Davies, N., IEEE Computer, Volume 52, Number 7, July 2019

- "An Application Platform for Wearable Cognitive Assistance", Chen, Z., PhD thesis, Computer Science Department, Carnegie Mellon University, Technical Report CMU-CS-18-104, May 2018

- "An Empirical Study of Latency in an Emerging Class of Edge Computing Applications for Wearable Cognitive Assistance", Chen, Z., Hu, W., Wang, J., Zhao, S., Amos, B., Wu, G., Ha, K., Elgazzar, K., Pillai, P., Klatzky, R., Siewiorek, D., Satyanarayanan, M., Proceedings of the Second ACM/IEEE Symposium on Edge Computing, Fremont, CA, October 2017

- "Towards Wearable Cognitive Assistance", Ha, K., Chen, Z., Hu, W., Richter, W., Pillai, P., Satyanarayanan, M., Proceedings of the Twelfth International Conference on Mobile Systems, Applications and Services (MobiSys 2014), Bretton Woods, NH, June 2014

- "Scaling Wearable Cognitive Assistance", Wang, J., PhD thesis, Carnegie Mellon University, May 2020

Videos

- IKEA Stool Assembly: Wearable Cognitive Assistant (August 2017)

- RibLoc System for Surgical Repair of Ribs: Wearable Cognitive Assistant (January 2017)

- Making a Sandwich: Google Glass and Microsoft Hololens Versions of a Wearable Cognitive Assistant (January 2017)

For all papers, publications, code and video from the CMU Edge Computing Research Team see: http://elijah.cs.cmu.edu/